What is data colonialism?

The term data colonialism describes how personal information — our clicks, movements, emotions, and conversations — is harvested, traded, and exploited by corporations and governments.

Just as traditional colonial powers extracted natural resources from conquered territories, today’s tech giants extract data from users across the globe.

This concept was popularized by scholars Nick Couldry and Ulises A. Mejias in their book The Costs of Connection: How Data Is Colonizing Human Life and Appropriating It for Capitalism (2019).

They argue that data has become the new raw material of capitalism, and its extraction often happens without awareness or compensation.

From colonial empires to digital empires

Colonialism once operated through land, labor, and trade.

Now, it functions through algorithms, platforms, and connectivity.

The companies that dominate today’s Internet, such as Facebook (now Meta), Google, Amazon, and TikTok, control global information flows much as old empires controlled shipping routes.

The difference is that users are both the resource and the producer.

Every post, search, and selfie contributes to a massive data economy that fuels advertising, surveillance, and AI training — often without meaningful consent.

Cambridge Analytica as a turning point

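The Cambridge Analytica scandal, which broke in 2018, was one of the first widely publicized examples of personal data being used as a political weapon.

Data from up to 87 million Facebook profiles, by Facebook’s own estimate, was harvested through a third-party personality-quiz app and used to target political messaging, most notably around the 2016 U.S. presidential election; the firm’s role in campaigns in the U.K. and elsewhere also came under scrutiny.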

The event revealed how easily data collected for “innocent” purposes could be repurposed for manipulation.

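It also showed that techniques refined for commercial marketing in wealthy nations could be turned on voters in developing ones: Cambridge Analytica and its parent company, SCL Group, had worked on election campaigns in countries such as Kenya. Critics describe this as a digital form of colonization through psychological profiling.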

Global inequality in data power

Data colonialism mirrors historical inequality.

Most digital platforms are owned by corporations in the Global North, yet they extract and profit from the behavior of users in the Global South.

The infrastructure, profits, and regulatory control remain concentrated in a handful of wealthy nations.

For example, a smartphone user in Kenya or India generates data that is often processed and monetized by companies headquartered in California.

Their digital labor enriches others, while their privacy and autonomy often go unprotected.

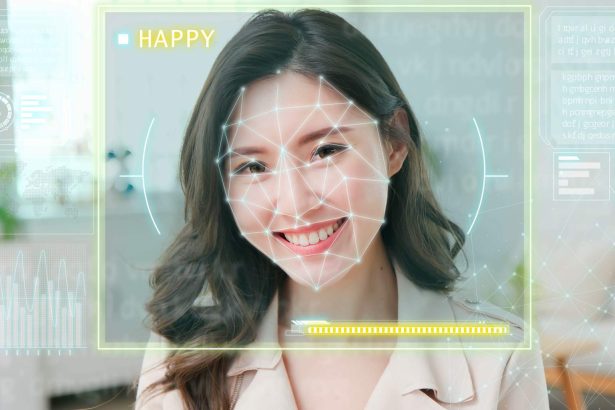

AI, big data, and the new extraction economy

Artificial intelligence depends on vast amounts of labeled data.

This has given rise to an entire industry of “data workers”: people, often in low-income countries, who are paid low wages to tag images, moderate content, or otherwise prepare the data used to train algorithms.

This invisible labor underpins many of the world’s most advanced technologies, from self-driving cars to facial recognition.

This arrangement reproduces patterns of exploitation familiar from traditional colonial economies: labor that is invisible, undervalued, and spread across the globe.

The role of consent and agency

One of the main ethical issues in data colonialism is consent.

Most users never read or understand the terms of service they agree to.

Even when they do, refusing consent is rarely an option — access to basic services often requires giving up data.

In this sense, digital participation itself becomes a form of coerced extraction: you can’t function in modern society without surrendering personal information.

Surveillance as a tool of control

Data colonialism isn’t just about profit — it’s about power.

Many governments use digital surveillance to monitor citizens, suppress dissent, and predict behavior.

The same technologies that enable personalization and convenience can also enforce control.

In authoritarian regimes, this creates what some call a digital panopticon: a system where everyone can be watched, and behavior is shaped through constant visibility.

Resistance and data sovereignty

Around the world, activists, academics, and policymakers are pushing back.

The movement for data sovereignty argues that communities should control how their data is collected and used — particularly in Indigenous and marginalized populations.

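New legal frameworks, such as the EU’s General Data Protection Regulation (GDPR), the African Union’s Convention on Cyber Security and Personal Data Protection (the Malabo Convention), and national laws like Kenya’s Data Protection Act (2019), seek to restore agency to users.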

These are steps toward redistributing power in the digital ecosystem.

Decolonizing technology

Decolonizing data means more than writing new laws — it requires rethinking the very design of digital systems.

It involves creating technologies that respect cultural diversity, promote transparency, and prioritize people over profit.

Open-source platforms, ethical AI projects, and local data cooperatives are emerging as alternatives to centralized tech monopolies.

These initiatives offer a glimpse of a future where technology serves society, not the other way around.

The future: toward a fair data economy

The challenge ahead is to build a digital economy that values human dignity as much as innovation.

This means establishing and enforcing global privacy standards, ensuring fair compensation for data labor, and empowering nations to govern their own digital resources.

The conversation that began with Cambridge Analytica must now expand to include questions of justice, equality, and sustainability in the age of algorithms.

Takeaway: The Cambridge Analytica era revealed the first cracks in the digital empire.

Today, as artificial intelligence deepens the logic of extraction, understanding data colonialism is essential for shaping a fairer digital future.

The fight for privacy is no longer just personal — it’s planetary.

- What is data colonialism?

- From colonial empires to digital empires

- Cambridge Analytica as a turning point

- Global inequality in data power

- AI, big data, and the new extraction economy

- The role of consent and agency

- Surveillance as a tool of control

- Resistance and data sovereignty

- Decolonizing technology

- The future: toward a fair data economy