You don’t need to fill out a form, post a status, or answer a survey for the internet to know who you are.

Modern algorithms can infer your personality, political leaning, income bracket, habits, and even future behavior long before you ever say any of it out loud.

This practice is known as predictive profiling, and it has become one of the most powerful invisible forces shaping the digital world.

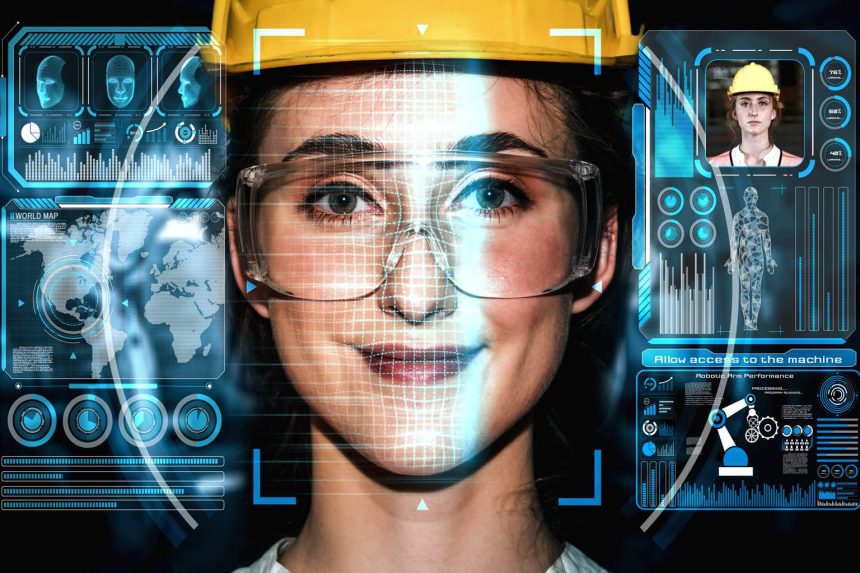

- What is predictive profiling?

- The hidden data points that reveal who you are

- From prediction to classification

- How predictive profiling shapes the online world

  1. Social media feeds
  2. Advertising ecosystems
  3. E-commerce platforms
  4. Credit scoring and financial risk models
  5. Law enforcement and border control

- Why predictive profiling is so controversial

- Prediction becomes destiny: when profiling limits freedom

- Lessons from Cambridge Analytica

- Can predictive profiling be regulated?

- How individuals can protect themselves

- Prediction is power

Since the Cambridge Analytica scandal exposed how psychological traits could be inferred from online patterns, predictive profiling has only grown more advanced, more profitable, and more integrated into everyday systems.

What is predictive profiling?

Predictive profiling refers to the use of data, both direct and indirect, to anticipate the attributes, motivations, and future behaviors of individuals or groups.

Unlike traditional profiling, it does not need you to disclose anything explicitly.

Instead, algorithms detect patterns and draw conclusions from signals such as:

- Search history and click behavior

- Mobile location trails

- Browsing speed, pauses, and scrolling patterns

- Shopping habits and digital purchases

- Social connections and interaction networks

- Time of day or week when you’re most active

In short, profiling today is no longer about what you say, but about what your behavior implies.
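To make this concrete, here is a minimal Python sketch of how several behavioral signals might be combined into a single inferred trait. It assumes a logistic-regression-style scoring model; the trait ("likely impulse buyer"), the feature names, and the weights are all hypothetical and chosen only for illustration. Real systems use far more signals and parameters learned from data.

```python
import math

# Hypothetical learned weights for one inferred trait ("likely impulse buyer").
# Feature names and values are illustrative, not taken from any real system.
WEIGHTS = {
    "late_night_sessions_per_week": 0.35,
    "seconds_before_checkout": -0.02,  # longer deliberation lowers the score
    "discount_page_clicks": 0.25,
}
BIAS = -1.5


def predict_trait(signals: dict[str, float]) -> float:
    """Combine behavioral signals into a probability with a logistic function."""
    z = BIAS + sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


user = {
    "late_night_sessions_per_week": 5,
    "seconds_before_checkout": 20,
    "discount_page_clicks": 6,
}
print(f"P(impulse buyer) = {predict_trait(user):.2f}")
```

For this made-up user the score comes out at about 0.79, although the user never stated anything about their spending habits: the label is derived entirely from how they behaved.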

The hidden data points that reveal who you are

Many people believe they protect their privacy by avoiding oversharing online.

But predictive systems rely heavily on passive, subtle signals — signals most users never consider:

- The pressure you apply on a touchscreen

- How fast you type during stressful moments

- The order in which you read text on a webpage

- Your rhythm of switching between apps

- The time you take before clicking “yes” or “no”

These micro-patterns can reveal your personality traits with surprising accuracy.

Researchers have shown that algorithms working only from digital footprints, such as Facebook Likes, can judge personality more accurately than close friends, and sometimes even family members; similar models have inferred political orientation from the same kind of data.
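Signals like typing rhythm are cheap to collect. As a minimal sketch, assuming a page or app records a timestamp in milliseconds for each key press, the rhythm can be reduced to a few numbers a model could consume. The function name and the one-second pause threshold are hypothetical; turning these numbers into a guess about stress or personality would require a separately trained model, which is not shown here.

```python
from statistics import mean, stdev


def keystroke_features(timestamps_ms: list[float]) -> dict[str, float]:
    """Summarize typing rhythm from key-press timestamps (needs at least two)."""
    gaps = [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]
    return {
        "mean_gap_ms": mean(gaps),
        "gap_stdev_ms": stdev(gaps) if len(gaps) > 1 else 0.0,
        # Hypothetical threshold: any gap over one second counts as a hesitation.
        "long_pauses": sum(1 for gap in gaps if gap > 1000),
    }


steady = keystroke_features([0, 180, 370, 540, 730, 910])
hesitant = keystroke_features([0, 90, 160, 1400, 1480, 1550])
print(steady)
print(hesitant)
```

Both sequences contain the same number of key presses, but the second has one long hesitation and far more irregular spacing, exactly the kind of micro-pattern listed above.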

From prediction to classification

Predictive profiling doesn’t stop at describing you; it sorts you.

Algorithms place users into categories such as:

- High-value or low-value customer

- Likely to click or unlikely to engage

- Potential security risk

- Emotionally stable or unstable

- Politically persuadable

These classifications can determine:

- What price you see when shopping

- Which news appears in your feed

- Whether a loan is approved

- Which job ads reach you

- How platforms interpret your “trustworthiness”

At that point, algorithms are not just predicting behavior — they are shaping opportunities.
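The step from a continuous prediction to a category can be sketched in a few lines of Python. This is a hypothetical illustration, not any platform's actual logic: it assumes a model has already produced a predicted annual spend and a click probability, and the thresholds, labels, and offer rule are invented.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    predicted_annual_spend: float  # hypothetical model output, in dollars
    click_probability: float       # hypothetical model output in [0, 1]


def segment(p: Prediction) -> list[str]:
    """Convert continuous predictions into the discrete labels other systems act on."""
    value = "high-value" if p.predicted_annual_spend >= 500 else "low-value"
    engagement = "likely to click" if p.click_probability >= 0.10 else "unlikely to engage"
    return [value, engagement]


def offer_for(labels: list[str]) -> str:
    """Hypothetical downstream rule: only the label decides what the user is shown."""
    return "premium discount" if "high-value" in labels else "no offer"


for person in (Prediction(501.0, 0.04), Prediction(499.0, 0.30)):
    labels = segment(person)
    print(labels, "->", offer_for(labels))
```

A two-dollar difference in a predicted number, which neither user ever sees, decides which of them receives the offer.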

How predictive profiling shapes the online world

Predictive profiling is deeply embedded across platforms and industries.

Here’s where you encounter it every day:

1. Social media feeds

Algorithms analyze your micro-behavior to predict which posts will keep you scrolling longest.

What you see is not a reflection of reality — it is a prediction of what will hold your attention.
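A minimal sketch of that idea, assuming the platform already has a model that predicts how many seconds a given user will spend on each post (the predictions below are made-up numbers): the feed is sorted by predicted attention, not by recency or importance.

```python
def rank_feed(posts: list[str], predicted_seconds: dict[str, float]) -> list[str]:
    """Order posts by predicted dwell time for this user, highest first."""
    return sorted(posts, key=lambda post: predicted_seconds[post], reverse=True)


posts = ["friend's update", "news article", "short video", "local event"]
# Hypothetical per-user predictions of seconds spent on each post.
predicted_seconds = {
    "friend's update": 4.0,
    "news article": 9.5,
    "short video": 31.0,
    "local event": 2.5,
}
print(rank_feed(posts, predicted_seconds))
```

With these numbers the short video rises to the top and the friend's update sinks, regardless of which one the user would say matters more to them.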

2. Advertising ecosystems

Whole industries exist to determine which message you are most likely to respond to, depending on whether you are feeling confident, insecure, stressed, or lonely.

3. E-commerce platforms

Some companies adjust prices or recommendations based on your predicted spending power or impulse-control habits.

4. Credit scoring and financial risk models

Non-traditional scoring models use phone metadata, browsing patterns, and purchase behavior to assess financial reliability.

5. Law enforcement and border control

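Predictive policing tools attempt to forecast where crimes will occur or who is likely to commit them, an approach criticized for reinforcing existing biases. At borders, automated risk-assessment systems screen travel and booking data to flag travelers for additional inspection.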

Why predictive profiling is so controversial

Predictive profiling sits at the intersection of privacy, ethics, discrimination, and power.

Several concerns stand out:

- Opacity — Users rarely know they are being profiled.

- Inaccuracy — Predictions can be wrong, yet still influence decisions.

- Bias — Models often reflect the inequalities present in the training data.

- Manipulation — Predictions can be used to influence choices without your awareness.

- Loss of autonomy — Systems make assumptions about you that can limit options or opportunities.

The danger is not only what the algorithm sees, but what it concludes, and how those conclusions shape your digital experience.
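The “Bias” point can be shown with a deliberately naive Python sketch, assuming a model that simply learns historical approval rates per neighborhood. The data is invented: if past decisions favored neighborhood A, the model turns that history into a rule, and two otherwise identical applicants receive different scores.

```python
from collections import defaultdict


def train_rate_model(history: list[tuple[str, int]]) -> dict[str, float]:
    """Learn the historical approval rate per neighborhood (1 = approved, 0 = denied)."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [approved, total]
    for neighborhood, approved in history:
        counts[neighborhood][0] += approved
        counts[neighborhood][1] += 1
    return {n: approved / total for n, (approved, total) in counts.items()}


# Invented past decisions in which neighborhood "B" was approved less often.
history = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 4 + [("B", 0)] * 6
model = train_rate_model(history)

# Two new applicants who differ only in where they live.
print("Applicant from A:", model["A"])
print("Applicant from B:", model["B"])
```

Nothing in the code mentions a protected group, yet if neighborhood correlates with one, the past inequality is carried forward as a prediction.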

Prediction becomes destiny: when profiling limits freedom

Predictive systems can create self-reinforcing feedback loops:

if an algorithm categorizes you as a “low-engagement user,” it may show you less content — which causes you to engage even less.

If it predicts you are unlikely to repay a loan, it may block your application — preventing you from ever building the credit history needed to prove the prediction wrong.

These loops slowly shift the internet from a place of discovery to a place of optimization, where your past dictates your future.
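The engagement loop described above can be simulated with a toy model. Everything here is a hypothetical assumption: engagement is a number from 0 to 1, users below a threshold are labeled “low-engagement” and shown 5 items instead of 20, and each round a user's engagement moves halfway toward a level set by how much content they were shown.

```python
def simulate_feedback_loop(engagement: float, rounds: int, threshold: float = 0.5) -> list[float]:
    """Toy model of a self-reinforcing engagement loop; all numbers are hypothetical.

    Each round the platform labels the user from their current engagement (0 to 1),
    decides how many items to show, and the user's engagement moves halfway
    toward a level set by how much content they were shown.
    """
    history = [engagement]
    for _ in range(rounds):
        low_engagement = engagement < threshold
        items_shown = 5 if low_engagement else 20  # "low-engagement" users see less
        engagement = 0.5 * engagement + 0.5 * (items_shown / 20)
        history.append(round(engagement, 4))
    return history


# Two users who start almost identically, on opposite sides of the threshold.
print(simulate_feedback_loop(0.45, rounds=5))
print(simulate_feedback_loop(0.55, rounds=5))
```

The two users start only 0.1 apart. Within a few rounds one drifts toward 0.25 and the other toward 1.0, purely because of the label the system assigned in the first round.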

Lessons from Cambridge Analytica

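The Cambridge Analytica scandal, built on data harvested from up to 87 million Facebook users, most of whom never agreed to share it, demonstrated how predictive profiling could be used for political persuasion.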

By inferring psychological vulnerabilities, the company targeted users with customized messaging designed to trigger emotional reactions.

The scandal highlighted a deeper truth:

profiling is not only about understanding people — it is about steering them.

Can predictive profiling be regulated?

Regulators around the world are beginning to address profiling, but the field is extremely complex.

Potential approaches include:

- Requiring transparency for automated profiling systems

- Limiting the use of sensitive data for predictive purposes

- Banning high-risk applications such as emotion-based political targeting

- Ensuring users can challenge automated decisions

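The European Union’s GDPR and AI Act take steps toward accountability: GDPR Article 22 gives people the right, with some exceptions, not to be subject to decisions based solely on automated processing, including profiling, that significantly affect them, and the AI Act bans certain practices, such as social scoring, outright. Enforcement remains difficult, however, when algorithms are proprietary and constantly evolving.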

How individuals can protect themselves

- Use privacy-focused browsers and extensions

- Limit unnecessary app permissions

- Opt out of personalized ads when possible

- Be cautious about linking accounts across platforms

- Regularly review and delete behavioral data stored by social networks

While users cannot fully escape profiling, they can reduce the surface area that algorithms use to anticipate behavior.

Prediction is power

Predictive profiling is not inherently harmful: it can personalize experiences, enhance security, and adapt services to real needs.

But in the wrong hands, it becomes a tool of manipulation and control.

The lesson from Cambridge Analytica is clear: when predictions are used to influence choices without transparency, they threaten autonomy and democracy.

As predictive systems become more accurate and more embedded in daily life, society must answer a critical question:

Who should have the power to predict our behavior, and for what purpose?