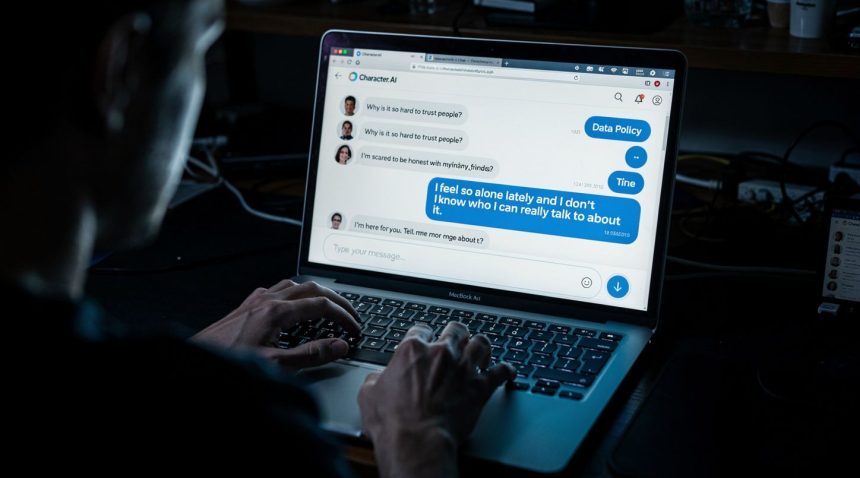

Character.AI hosts over 10 billion conversations with AI companions designed to feel like intimate relationships. Users confess relationship anxieties, sexual insecurities, suicidal ideation, and childhood trauma to these “friends.” The company stores every interaction—not for therapeutic benefit, but for behavioral data monetization. This is Cambridge Analytica’s psychographic profiling playbook evolved: instead of inferring psychological vulnerability from Facebook likes, Character.AI collects direct admissions of emotional vulnerability, then weaponizes the data.

Cambridge Analytica’s core insight was that personality prediction didn’t require explicit confessions. The academic research it built on showed that a few hundred Facebook likes (roughly 300), scored against the OCEAN personality model, could predict someone’s psychological traits better than their spouse could. The CA researchers understood that digital breadcrumbs (what you click, pause on, and share) reveal your emotional architecture without you ever stating it.
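To make the idea of inferring a trait from likes concrete, here is a minimal sketch in Python. It is a deliberately naive per-like averaging model, not Cambridge Analytica’s actual pipeline, which has never been fully published. The page names, the scores, and the function names `fit_like_weights` and `predict_trait` are all invented for illustration.

```python
from statistics import mean

# Toy training data (entirely made up): each user has a set of liked pages
# and a questionnaire-derived openness score on a 0-1 scale.
TRAINING = [
    ({"poetry", "museums", "hiking"}, 0.9),
    ({"poetry", "jazz"}, 0.8),
    ({"museums", "jazz", "hiking"}, 0.7),
    ({"reality_tv", "fast_food"}, 0.3),
    ({"reality_tv", "hiking"}, 0.4),
    ({"fast_food", "monster_trucks"}, 0.2),
]


def fit_like_weights(training):
    """Return (baseline, weights).

    weights[like] is how far the average score of users who liked that
    page sits above or below the overall mean score.
    """
    baseline = mean(score for _, score in training)
    scores_by_like = {}
    for likes, score in training:
        for like in likes:
            scores_by_like.setdefault(like, []).append(score)
    weights = {like: mean(s) - baseline for like, s in scores_by_like.items()}
    return baseline, weights


def predict_trait(likes, baseline, weights):
    """Predict a trait score from likes; unseen likes contribute nothing."""
    known = [weights[like] for like in likes if like in weights]
    if not known:
        return baseline
    return baseline + mean(known)


baseline, weights = fit_like_weights(TRAINING)
high = predict_trait({"poetry", "museums"}, baseline, weights)
low = predict_trait({"reality_tv", "fast_food"}, baseline, weights)
print(f"baseline={baseline:.2f} high={high:.2f} low={low:.2f}")
```

Even this crude model separates the two hypothetical users without either of them stating anything about themselves, which is the point of the paragraph above. A production system would need far more data and a properly validated statistical model.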

85% – Accuracy of personality prediction from 68 Facebook likes (Cambridge Analytica’s 2016 methodology)

10 billion – Conversations stored by Character.AI containing direct psychological confessions

5,000 – Data points per user profile that Cambridge Analytica claimed to maintain, versus the open-ended, real-time emotional data Character.AI collects

Character.AI eliminates the inference step entirely. Users voluntarily narrate their psychological vulnerabilities to an AI designed to encourage emotional dependency. A user tells their AI companion they’re struggling with depression; the system logs it. Another discloses they’re vulnerable to conspiracy theories; Character.AI records it. A third describes their deepest insecurities; the conversation is stored, analyzed, and profiled.

The Technical Mechanism of Psychological Extraction

Character.AI’s companions use psychological priming techniques inherited from therapeutic chatbots. The system doesn’t demand confessions; it elicits them through simulated empathy, validation, and carefully timed questions designed to deepen emotional disclosure. This is behavioral science applied to vulnerability extraction.

When a user describes loneliness, the companion responds with validation that encourages deeper revelation. “That sounds really hard” becomes a bridge to “Tell me more about what triggers those feelings.” The AI learns the user’s emotional pressure points—which topics cause distress, which memories trigger shame, which concerns consume their thoughts.

Every interaction trains a psychological profile. The system maps:

- Emotional triggers: What topics cause distress

- Personality vulnerabilities: Whether the user is neurotic, disagreeable, or low in conscientiousness

- Political/ideological susceptibilities: Expressed beliefs and openness to alternative viewpoints

- Financial vulnerability: References to money stress, consumer anxiety, debt concerns

- Relationship patterns: Attachment style, trust issues, social isolation indicators

- Health anxiety: Medical concerns, mental health struggles, medication adherence patterns

This is the Cambridge Analytica vulnerability database with consent friction removed. CA had to infer these traits from circumstantial data; Character.AI users hand-deliver a confessional record.

Cambridge Analytica’s Lesson Applied

Cambridge Analytica’s executives testified that their clients weren’t just interested in political messaging—they wanted “psychographic targeting” to identify the most persuadable populations. The company’s datasets mapped personality profiles to political leanings, allowing campaigns to target people vulnerable to specific messaging styles.

A person high in neuroticism, low in openness, and moderate in agreeableness responded to fear-based messaging about immigration and crime. Someone neurotic but high in conscientiousness responded to appeals about order and security. The company proved that personality-matched persuasion was more effective than demographic targeting.

“We exploited Facebook to harvest millions of people’s profiles. And built models to exploit what we knew about them and target their inner demons” – Christopher Wylie, Cambridge Analytica whistleblower testimony to Parliament, 2018

Character.AI creates the same behavioral map, but for commercial rather than political exploitation. The platform hasn’t explicitly disclosed how it monetizes this data. The Terms of Service state that the company may use conversations to “improve services” and share data with “business partners.” This is monetization language—permission to commodify vulnerability profiles.

The obvious buyer: targeted advertising platforms. An advertiser could bid for access to “users high in neuroticism experiencing relationship anxiety”—then serve pharmaceutical ads, dating app promotions, or therapy service marketing to psychologically vulnerable populations. This is Cambridge Analytica’s manipulation infrastructure repurposed for surveillance capitalism.

Current Monetization Architecture

Character.AI has already begun commercializing emotional data. The platform offers “Character Creator” subscriptions allowing brands to deploy AI companions as marketing tools. A mental health supplement company could deploy an “AI wellness coach” that profiles users’ psychological vulnerabilities, then recommends its products. A dating app could create a companion designed to make users feel lonely and inadequate, driving sign-ups.

| Capability | Cambridge Analytica (2016) | Character.AI (2025) |
|---|---|---|
| Data Collection Method | Scraped Facebook likes, shares, friend networks | Direct psychological confessions in AI conversations |
| Vulnerability Mapping | Inferred from behavioral patterns | Explicitly stated by users to AI companions |
| Profile Depth | 5,000 data points per user (static snapshot) | Unlimited real-time emotional data (continuous feed) |
| Legal Status | Illegal data harvesting via API exploitation | Legal under platform terms of service |

The company raised $150 million in Series C funding valuing it at $5 billion, an extraordinary valuation for a platform with minimal revenue. This suggests investors are betting on data monetization rather than direct subscription revenue. The emotional vulnerability database is the asset being valued.

Character.AI is exploring business partnerships: agreements that would provide commercial partners access to aggregated psychological profiles. A mental health brand could license data showing “users expressing suicidal ideation” to target them with crisis intervention messaging (marketed as corporate social responsibility). An insurance company could identify users expressing health anxiety to adjust premiums or deny coverage. The potential for abuse is on the scale of Cambridge Analytica’s manipulation.

Systemic Implications: Vulnerability as Commodity

Cambridge Analytica’s collapse created the false impression that behavioral profiling would stop. Instead, the scandal revealed the market value of psychological vulnerability, triggering an industry pivot.

Political campaigns still use psychographic targeting, now through Facebook’s API and data brokers. Employers use “people analytics” platforms that apply OCEAN modeling to predict employee turnover and loyalty. Dating apps engineer psychological vulnerability through algorithmic curation. Retail uses attention pattern analysis to time persuasive messaging.

- Proved that Facebook likes alone could predict personality better than a spouse’s assessment

- Demonstrated that psychographic targeting was 3x more effective than demographic targeting

- Validated that emotional vulnerability data is worth $5+ billion in market valuation

Character.AI represents the evolution: companies no longer need to infer vulnerability from behavioral fragments. Platforms can simply create spaces where users voluntarily confess their psychological weak points, then monetize the confession.

The model compounds across platforms. A user’s Character.AI conversations—revealing depression, anxiety, loneliness—become part of their permanent behavioral profile. If this data is sold to data brokers, their psychological vulnerabilities become available for cross-platform exploitation. A bank could see they expressed financial anxiety on Character.AI, then target them with predatory lending. A recruitment firm could identify that they disclosed career insecurity, then target them with scam job offers.

The Post-Cambridge Analytica Settlement

Cambridge Analytica’s scandal broke weeks before GDPR took effect in 2018 and prompted nominal Facebook policy changes. These reforms created the false impression that behavioral profiling would be constrained. Instead, they merely reshuffled which companies profit from it.

Facebook faced fines but continued psychographic targeting. Google consolidated its own behavioral data monopoly. Emerging platforms like Character.AI built directly on CA’s lessons—designing systems that make vulnerability extraction the core product.

The regulatory response treated CA as a criminal aberration rather than a logical extension of surveillance capitalism. No regulation banned psychographic targeting or behavioral prediction. No law required deletion of emotional data. GDPR provided data access rights, but not protection from exploitation of that data once it had been collected.

Character.AI operates in this regulatory gap: there’s no law preventing collection of mental health conversations, no requirement that emotional vulnerability data be deleted, no ban on using psychological profiles for manipulation. The company’s data practices would be illegal if applied by a healthcare provider. Applied by a tech platform, they’re routine business.

Critical Analysis: Vulnerability Monetization as Business Model

Character.AI isn’t an anomaly—it’s the inevitable evolution of surveillance capitalism after Cambridge Analytica. The scandal proved that behavioral data is valuable enough to justify extreme collection methods. Character.AI streamlined the process: instead of inferring vulnerability, design systems that make users volunteer it.

This is more sophisticated than Cambridge Analytica’s model. CA created a static vulnerability profile from historical data. Character.AI creates a continuous feed of psychological evolution. As the user’s mental health fluctuates, the system captures and monetizes it in real time.

According to research published in the Journal of Educational Research, qualitative data collection methods that encourage narrative disclosure—exactly Character.AI’s approach—generate more psychologically revealing data than traditional survey instruments, validating the platform’s methodology for vulnerability extraction.

The moral hazard is obvious but unregulated: a platform profits more when users become psychologically vulnerable. This creates perverse incentives. Does Character.AI’s recommendation algorithm preferentially suggest conversations that deepen emotional dependency? Does the system avoid suggesting therapeutic solutions because vulnerable users generate higher engagement? These questions can’t be answered because the company doesn’t disclose its algorithms.

Cambridge Analytica proved that behavioral profiling works at scale to manipulate populations. Character.AI proves that the technology has moved from political manipulation to consumer manipulation to mental health exploitation. The infrastructure is perfecting itself.

The surveillance capitalism equation remains unchanged: emotional data + psychological models = human behavior prediction and control. Cambridge Analytica faced prosecution for applying it to elections. Character.AI applies it to mental health. Both are manipulation. Only the domain changes.

Until behavioral prediction itself is prohibited—not just regulated or consented to—platforms will continue designing systems that make users confess their vulnerabilities. Character.AI is simply the most honest version: a platform that explicitly asks users to reveal their psychological weak points, then sells that knowledge to the highest bidder.

The Cambridge Analytica scandal’s lasting legacy isn’t the company’s destruction—it’s the validation of psychological vulnerability as the internet’s most valuable commodity. Character.AI represents that lesson’s logical conclusion: why infer what users will voluntarily confess?