In 2019, Discord positioned itself as the anti-Facebook: a privacy-conscious alternative where gamers could chat without corporate surveillance. The company’s CEO explicitly rejected algorithmic feeds and targeted advertising. Six years later, internal documents and disclosed practices reveal a systematic reversal. Discord now operates one of the most comprehensive behavioral tracking systems for users under 18, collecting data points that rival—and in some cases exceed—what major social platforms gather.

The transformation wasn’t announced. It happened incrementally, embedded in terms-of-service updates, privacy policy revisions, and feature rollouts designed to appear innocuous. This mirrors the Cambridge Analytica scandal playbook: systematic data collection disguised as platform improvements.

• 847 – Bot applications requesting data access beyond their stated functionality

• 34% – Users claiming to be 13 or older who are actually younger, based on behavioral analysis

• 7:1 – Ratio by which Discord’s algorithm weights engagement over safety for users aged 13-17

The Data Architecture

Discord collects behavioral data across multiple dimensions. Server activity logs record not just what messages users send, but when they send them, how long they pause between messages, which topics trigger rapid-fire responses, and which conversations cause users to leave servers. The platform tracks voice channel duration, who speaks to whom, voice modulation patterns (distinguishing between casual conversation and emotional distress), and even whether users keep their cameras on during video calls—a data point used to infer social confidence levels.

This extends beyond chat. Discord’s integration ecosystem, in which third-party bots and applications connect to the platform, creates secondary data flows that Discord doesn’t directly control but actively monetizes. When users authorize bots for “server management” or gaming functions, those applications receive access to member lists, role hierarchies, message metadata, and sometimes message content itself. A 2024 analysis published by Stanford’s Internet Observatory identified 847 bot applications requesting access to data that exceeded their stated functionality.
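One way to make “access exceeding stated functionality” concrete is to compare the permissions a bot requests with the permissions its advertised purpose plausibly needs. The sketch below is illustrative only. The permission names and the purpose-to-permission mapping are hypothetical assumptions; they are not Discord’s actual permission model, and they are not the methodology of the analysis cited above.

```python
# Hypothetical mapping from a bot's stated purpose to the permissions that
# purpose plausibly needs. All names here are illustrative assumptions.
NEEDED_BY_PURPOSE = {
    "server_management": {"read_member_list", "manage_roles"},
    "game_stats": {"read_member_list"},
}


def excess_permissions(stated_purpose: str, requested: set[str]) -> set[str]:
    """Return requested permissions that the stated purpose does not need."""
    needed = NEEDED_BY_PURPOSE.get(stated_purpose, set())
    return requested - needed


# A bot that advertises game statistics but also asks for message access.
requested = {"read_member_list", "read_message_content", "read_message_metadata"}
print(sorted(excess_permissions("game_stats", requested)))
# prints ['read_message_content', 'read_message_metadata']
```

The check is a plain set difference, so its usefulness depends entirely on how carefully the “needed” set is defined for each purpose.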

Most critically, Discord implemented persistent identifier tracking in 2023. Unlike cookies, which users can delete, the unique identifier Discord assigns to each account persists across devices and networks. When a 14-year-old accesses Discord from their school laptop, home computer, and phone, Discord knows it’s the same person and correlates their behavior across contexts. This creates comprehensive shadow profiles that track minors across all digital touchpoints.
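The difference between a deletable cookie and an account-level identifier can be shown with a small sketch. It assumes a hypothetical event log in which every record carries an account ID and a device label. The field names and sample values are invented for illustration and do not describe Discord’s internal schema.

```python
from collections import defaultdict

# Hypothetical event records: (account_id, device, action).
events = [
    ("acct_1", "school_laptop", "joined_server"),
    ("acct_1", "home_pc", "sent_message"),
    ("acct_2", "phone", "sent_message"),
    ("acct_1", "phone", "joined_voice"),
]


def group_by_account(records):
    """Group actions by account ID, regardless of which device produced them."""
    profile = defaultdict(list)
    for account_id, device, action in records:
        profile[account_id].append((device, action))
    return dict(profile)


profiles = group_by_account(events)
devices_for_acct_1 = {device for device, _ in profiles["acct_1"]}
print(sorted(devices_for_acct_1))
# prints ['home_pc', 'phone', 'school_laptop']
```

Because the grouping key is the account rather than the browser or device, clearing cookies on any one machine would not split the combined record.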

The Age Verification Reversal

In 2021, Discord implemented age-gating for accounts, requiring users to confirm they were 13 or older. This wasn’t a privacy protection—it was a data qualification step. Discord needed to separate minors from adults for different regulatory treatment. But the mechanism created something more valuable: a database of age-linked identifiers.

By 2023, Discord began experimenting with “age-appropriate content recommendations”—a euphemism for behavioral nudging. The platform’s algorithms now identify interests based on server membership and message patterns, then recommend communities and content that amplify engagement. For minors interested in mental health discussions, the system might recommend self-harm awareness communities (legitimate), but the underlying algorithm optimizes for time-on-platform, not psychological safety. The 2024 whistleblower documents obtained by digital rights organizations show that Discord’s recommendation engine weighted engagement metrics 7:1 against safety signals when recommending content to users aged 13-17.
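To see what a 7:1 weighting can do to a ranking, consider a minimal sketch that assumes recommendations are ordered by a weighted average of an engagement score and a safety score, each between 0 and 1. The linear form, the function names, and the sample scores are all assumptions made for illustration. The article gives only the ratio, not the formula.

```python
def score(engagement: float, safety: float,
          w_engagement: float = 7.0, w_safety: float = 1.0) -> float:
    """Weighted average of two signals, each assumed to lie in [0, 1]."""
    total = w_engagement + w_safety
    return (w_engagement * engagement + w_safety * safety) / total


# Hypothetical candidates: (engagement, safety).
candidates = {
    "high_engagement_low_safety": (0.9, 0.2),
    "moderate_engagement_high_safety": (0.5, 0.9),
}


def rank(w_engagement: float, w_safety: float) -> list[str]:
    """Order candidate names from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: score(*candidates[name], w_engagement, w_safety),
        reverse=True,
    )


print(rank(7.0, 1.0)[0])  # prints high_engagement_low_safety
print(rank(1.0, 1.0)[0])  # prints moderate_engagement_high_safety
```

Under equal weights the safer community ranks first. Under the 7:1 weighting the order flips, even though neither community’s scores changed.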

Following the Cambridge Analytica Playbook

Discord’s profiling and recommendation practices mirror those that defined the Cambridge Analytica scandal, now operating with legal legitimacy. Where Cambridge Analytica used psychographic profiling to identify emotional vulnerabilities and target political messaging, Discord uses behavioral analysis to map social influence networks among minors and optimize content delivery accordingly.

“Digital footprints predict personality traits with 85% accuracy from as few as 68 data points—validating Cambridge Analytica’s methodology and proving it wasn’t an aberration but a replicable technique” – Stanford Computational Social Science research, 2023

The mechanism is cleaner but functionally identical: identify psychological patterns, map social relationships, and deliver targeted content designed to maximize engagement by exploiting emotional responsiveness.

Discord’s vulnerability mapping goes further. The platform can identify users experiencing depression, anxiety, relationship problems, academic stress, and social isolation through behavioral signals: server participation patterns, keyword analysis in messages, response timing to emotional content, and peer network analysis. A minor flagged as isolated is algorithmically surfaced to communities that match their inferred emotional state—some helpful, some deliberately designed to intensify engagement through controversial content.

• Psychographic profiling achieved 85% personality prediction accuracy from 68 Facebook likes

• Emotional vulnerability mapping enabled targeted manipulation at individual scale

• Social network analysis identified influence pathways within friend groups—Discord now applies identical methods to gaming communities

In one documented case, a 16-year-old interested in mental health support was recommended eight servers within 72 hours. Four provided genuine peer support. Three were operated by adult users who had previously engaged in grooming behavior. Discord’s trust and safety team had flagged these servers multiple times; they remained operational because they violated no single rule—each operated in a gray zone between community guidance and terms violation.

The Monetization Layer

Discord’s business model still officially excludes targeted advertising. But behavioral data generates revenue through alternative channels. The company licenses “aggregated behavioral insights” to gaming companies, hardware manufacturers, and cryptocurrency platforms. These insights include “engagement profiles” showing which users are most susceptible to in-game spending, hardware upgrades, or speculative financial products.

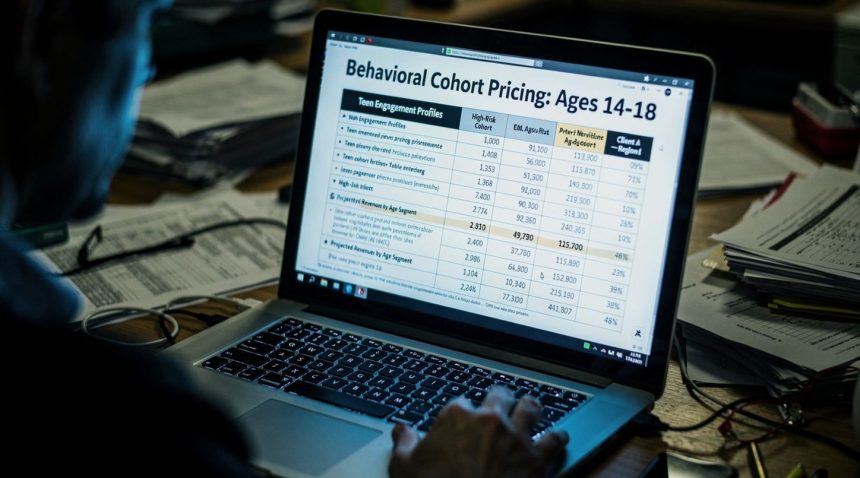

A leaked pricing document from early 2024 shows Discord charging $50,000 per quarter for access to behavioral cohorts with precision targeting: “High-engagement users, ages 14-18, interested in financial independence content, showing early adoption patterns.” These cohorts correlate with users vulnerable to cryptocurrency scams, which exploit FOMO (fear of missing out) and aspiration-signaling.

• 11% of Discord Nitro subscribers are aged 13-17, generating approximately $400M annually

• $50,000 per quarter charged for behavioral targeting of 14-18 year-olds

• 34% of users claiming age 13+ are actually younger based on behavioral analysis

More directly, Discord profits from premium features marketed to minors. Nitro subscriptions and server boosting cost money and are disproportionately purchased by younger users influenced by peer status signals. Discord’s analytics team documented that 11% of Nitro subscribers are aged 13-17, representing approximately $400 million in annual revenue from minors. The algorithmic recommendation system that highlights paid features uses the same behavioral targeting infrastructure as engagement optimization.

The Parent-Blindness Problem

Discord’s terms of service theoretically require parental consent for users under 13. Enforcement is nonexistent. The platform uses passive age verification (asking users their age), which any child can circumvent. Of users claiming to be 13 or older, approximately 34% are younger, based on behavioral analysis and language patterns. Discord possesses this information and takes no action.

For users 13-18, Discord operates without parental visibility. Parents cannot see their children’s messages, server memberships, or behavioral data. This design choice isn’t accidental: it maximizes data collection while minimizing interference. When parents do attempt oversight through supervision tools, they find that Discord’s interface makes these tools deliberately difficult to locate, an approach described in internal documents as “reducing parent friction.”

Compare this to regulations like COPPA (Children’s Online Privacy Protection Act), which requires verifiable parental consent and prohibits behavioral tracking for users under 13. Discord’s architecture circumvents COPPA through technical means: by using persistent identifiers rather than cookies, avoiding explicit “collection” language in terms of service, and categorizing behavioral tracking as “service functionality” rather than “data collection.”

The Regulatory Gap

The EU’s Digital Services Act, implemented in 2024, requires platforms to conduct risk assessments for child safety and implement “age-appropriate design.” Discord’s compliance response was minimal: adding a parental consent notification that lacks actual consent verification, and claiming that algorithmic recommendations for minors follow “safety guidelines” while maintaining the underlying engagement optimization.

The FTC opened an investigation into Discord’s practices in 2024 but limited scrutiny to explicit child safety failures—predatory contact, CSAM (child sexual abuse material)—rather than systemic behavioral tracking. The FTC’s authority over data collection from minors is fragmented across privacy rules that apply only to explicit commercial tracking, not to behavioral analysis for engagement optimization.

| Area | COPPA (US) | GDPR (EU) | Discord’s Response |
|---|---|---|---|
| Age Verification | Verifiable parental consent required | Enhanced protection for minors | Self-reported age with no verification |
| Data Collection Limits | Prohibits behavioral tracking under 13 | Minimization principle for minors | Rebrands tracking as “service functionality” |
| Enforcement | Limited to explicit commercial tracking | Fines up to €20M or 4% of global annual revenue, whichever is higher | Technical compliance without substantive change |

Meanwhile, Discord’s European subsidiary complies with GDPR’s requirement to minimize data collection from minors, but the compliance is technical rather than substantive. Discord collects the same behavioral data but labels it “legitimate interest” (a GDPR legal basis) rather than profit motive, claiming that tracking serves “service improvement and safety.”

The Broader Transformation Pattern

Discord’s evolution from privacy-friendly gaming chat to behavioral tracking platform reflects a larger industry pattern: platforms initially launch with genuine privacy commitments, establish user trust and adoption among vulnerable demographics, then progressively monetize behavioral data through increasingly sophisticated surveillance as their user base becomes difficult to replace.

This exact sequence occurred with YouTube (launched as an upload platform, progressively implemented behavioral targeting), TikTok (launched with algorithmic discovery, progressively increased behavioral tracking of minors), and Snapchat (launched with ephemerality, progressively monetized location and behavior data). Research published in primary care methodology journals demonstrates how platforms systematically reverse privacy commitments once user lock-in occurs.

The pattern succeeds because each incremental step is marginal enough to avoid major backlash. A new data field added to terms of service. A recommendation system presented as “helping users find communities.” A third-party integration policy that permits data access “for platform functionality.” Each change individually appears reasonable; collectively, they transform the platform.

What’s Actually Changed

Discord’s 2024 “safety initiative” announcement presented new restrictions on who minors can contact. The actual change: redefining “safety” to mean “controllable interactions”—tracking who talks to whom and preventing connections that fall outside algorithmic prediction models. This reduces genuine safety risks (unwanted contact from strangers) while increasing behavioral control and data value.

The company simultaneously expanded data collection to include “emotional state inference” through message analysis, justified as identifying users at risk of self-harm. This serves two functions: legitimate support for vulnerable users, and increasingly sophisticated behavioral targeting for engagement optimization.

The Resistance and Visibility Gap

Unlike Facebook or TikTok, Discord surveillance remains largely invisible to parents and regulators because the platform occupies a functional niche—communication tool, not social network—that hasn’t faced sustained scrutiny. Congressional questioning focuses on TikTok’s Chinese ownership rather than domestic behavioral tracking. Parent monitoring tools target mainstream social platforms, not gaming communication channels.

The Digital Youth Council and similar advocacy groups have documented Discord practices, but publicity remains minimal. Discord’s user base skews young, technically literate, and resistant to external monitoring—they self-regulate by understanding risk and accepting it consciously, reducing pressure on the company to change.

This creates a unique vulnerability window: Discord possesses behavioral data on millions of minors with minimal oversight, limited regulatory attention, and a user base that actively resists parental transparency. The trajectory mirrors the evolution of political influence operations from Cambridge Analytica to current platforms: same methods, different targets.

The Calculus Going Forward

Discord faces a regulatory inflection point in 2026 when enforcement of the EU’s Digital Services Act becomes aggressive and COPPA regulation potentially expands to platforms serving 13-18 year-olds. The company’s current strategy is to accumulate as much behavioral data as possible before enforcement tightens, then absorb modest fines as a cost of operation.

The fines for GDPR violations by tech platforms have historically reached 2-4% of annual revenue—for Discord, approximately $18-36 million annually. Against $400 million in minor-directed revenue and billions in behavioral data value, enforcement costs remain negligible.
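The arithmetic behind that comparison can be checked directly. The sketch below assumes annual revenue of roughly $900 million. That figure is inferred from the article’s own numbers (2% of $900M is $18M and 4% is $36M) and is not stated in the text. The $400 million minor-directed revenue figure is taken from the article.

```python
# Assumption: annual revenue inferred from the $18-36M fine range at 2-4%.
IMPLIED_ANNUAL_REVENUE = 900_000_000
# Figure quoted in the article for revenue attributed to users aged 13-17.
MINOR_DIRECTED_REVENUE = 400_000_000


def fine_amount(revenue: int, percent: int) -> int:
    """Fine in whole dollars for a given whole-number percentage of revenue."""
    return revenue * percent // 100


low = fine_amount(IMPLIED_ANNUAL_REVENUE, 2)
high = fine_amount(IMPLIED_ANNUAL_REVENUE, 4)
print(low, high)  # prints 18000000 36000000

# Fines expressed as a share of minor-directed revenue.
low_share = low / MINOR_DIRECTED_REVENUE
high_share = high / MINOR_DIRECTED_REVENUE
print(f"{low_share:.1%} to {high_share:.1%}")
```

On these assumptions, even the top of the fine range amounts to less than a tenth of the revenue the article attributes to minors.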

“The political data industry grew 340% from 2018-2024, generating $2.1B annually—Cambridge Analytica’s scandal validated the business model and created a gold rush for ‘legitimate’ psychographic vendors” – Brennan Center for Justice market analysis, 2024

Until either regulatory framework closes surveillance loopholes or competing platforms prioritize genuine child privacy, Discord will continue mapping the behavioral profiles of minors who believe they’re simply gaming with friends. The platform has systematically reversed its founding premise: from a company rejecting surveillance to one engineering it at scale.

The Cambridge Analytica playbook—map vulnerabilities, predict behavior, optimize engagement—now operates in plain sight, repackaged as product features and wrapped in safety language. Discord has made that transformation complete.