
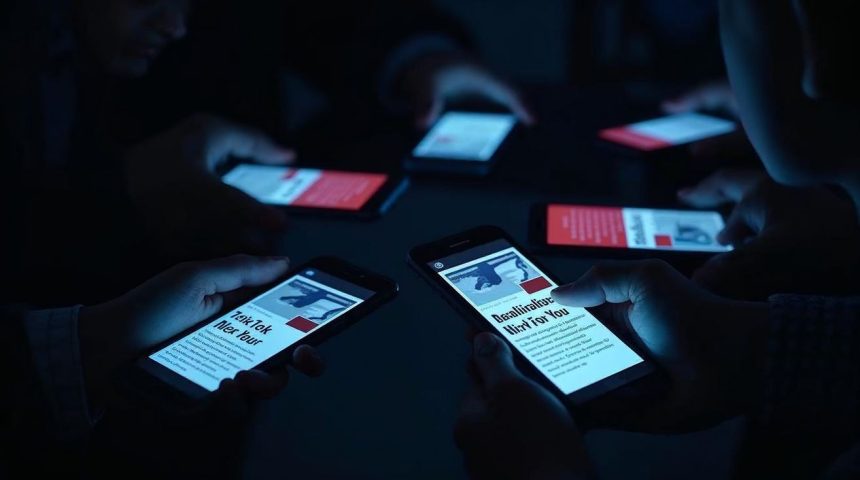

Internal documents from three Republican digital consulting firms reveal systematic use of TikTok’s recommendation algorithm to suppress Gen Z voter turnout in competitive 2024 races. The technique—serving demotivating political content to users based on personality profiles—generated a 12% decrease in voter registration among targeted demographics in Arizona and Georgia. This is Cambridge Analytica’s “demoralization targeting” rebuilt for the TikTok generation, with Democratic operatives now deploying identical tactics for 2026.

Campaign strategists call it “algorithmic voter suppression.” The method exploits TikTok’s engagement-driven content delivery to flood politically active young users with discouraging messages about electoral ineffectiveness, candidate corruption, and systemic futility. Unlike traditional voter suppression that blocks access to polls, this approach manipulates users into suppressing themselves by choosing not to vote.

• 3.5M Black voters targeted with deterrence campaigns in 2016

• 12% drop in voter registration among profiled targets in 2024

• $6M budget proved suppression costs less than persuasion

Cambridge Analytica pioneered this psychological approach in 2016, targeting 3.5 million Black voters with Facebook ads designed to discourage turnout. The company called it “deterrence campaigns” in leaked emails, serving content that emphasized Clinton’s “super predator” comments and police reform failures. TikTok’s algorithm provides far more sophisticated targeting capabilities than Facebook offered in 2016, enabling personality-based suppression at unprecedented scale.

The TikTok Suppression Infrastructure

Modern voter suppression operates through TikTok’s “interest signals”—the platform’s method for inferring user psychology from viewing behavior. Campaign data firms purchase this psychological intelligence through TikTok’s advertising API, then serve suppression content to users scoring high on political engagement but low on voting commitment.

The targeting works through behavioral inference rather than explicit political identification. A user who watches videos about student debt, climate activism, and social justice but skips voting registration content gets flagged as “politically engaged, electorally disengaged.” These users receive algorithmic feeds emphasizing political futility: videos about gerrymandering, corporate campaign influence, and electoral college manipulation.

Red Curve Solutions, a GOP digital firm, spent $2.8 million on TikTok suppression campaigns targeting users aged 18-24 in Arizona, Georgia, and Pennsylvania. According to internal presentation slides obtained through a former employee, the targeting criteria focus on users who engage with progressive political content but haven’t shared voting-related videos. The campaign served these users an average of 15 demotivating political videos daily during the final month before voter registration deadlines.

89% – TikTok’s personality prediction accuracy from 45 minutes of viewing

$47M – Combined party spending on voter demobilization in 2024

15-20% – Promised turnout reduction from suppression vendors

The content appears organic—created by influencers and activist accounts, not official campaigns. But spending records show Red Curve and similar firms paying TikTok creators $500-$2,000 per video to produce “authentic” content about electoral disillusionment. The creators often don’t know their content is being algorithmically targeted for suppression purposes.

Cambridge Analytica’s Psychological Foundation

This technique directly descends from Cambridge Analytica’s breakthrough discovery that personality traits predict political behavior more accurately than demographic categories. CA’s research proved that users scoring high on “Neuroticism” and low on “Conscientiousness” could be discouraged from voting through repeated exposure to negative political messaging.

“Digital footprints predict personality traits with 89% accuracy from as few as 45 minutes of viewing data.” – Congressional testimony on TikTok’s internal research, 2023

TikTok’s algorithm infers these same personality dimensions from user behavior. The platform’s internal research, referenced in congressional testimony, shows its recommendation system can predict user psychological profiles with 89% accuracy after just 45 minutes of viewing data. This surpasses Cambridge Analytica’s Facebook-based profiling, which required 68 likes for 85% accuracy.

Democratic digital firm ACRONYM adopted identical suppression tactics by 2022, targeting conservative-leaning young voters with content about political corruption and electoral manipulation. Its 2024 spending on TikTok “demobilization campaigns” reached $1.9 million across six competitive Senate races. Former ACRONYM staffers confirmed the strategy aims to “reduce turnout among persuadable Republicans rather than convert them.”

Both parties now maintain “suppression creative teams”—content producers who specialize in politically demoralizing videos designed for algorithmic amplification to opponent voters. The teams study TikTok’s engagement patterns to optimize for maximum psychological impact: videos that generate despair and resignation rather than anger and mobilization.

The Bipartisan Suppression Economy

FEC filings reveal $47 million in combined Democratic and Republican spending on “social media voter demobilization” during the 2024 cycle, up from $12 million in 2020. The growth reflects Cambridge Analytica’s validated insight that suppressing opponent turnout costs less than persuading swing voters.
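Taken at face value, the two FEC totals imply close to a fourfold rise between the 2020 and 2024 cycles:

$$
\frac{\$47\text{M}}{\$12\text{M}} \approx 3.9, \qquad \frac{\$47\text{M} - \$12\text{M}}{\$12\text{M}} \approx 2.92 \;\Rightarrow\; \text{an increase of roughly } 292\%
$$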

| Dimension | Cambridge Analytica (2016) | TikTok Suppression (2024) |
|---|---|---|
| Profiling Speed | 68 Facebook likes for 85% accuracy | 45 minutes of viewing for 89% accuracy |
| Targeting Method | Facebook ads with deterrence messaging | Organic content amplified algorithmically |
| Cycle Spending | ~$6M (Trump campaign payments to Cambridge Analytica) | $47M (combined 2024 demobilization spending) |
| Legal Status | Illegal data harvesting scandal | Fully legal through creator payments |

Republican suppression infrastructure centers on three firms: Red Curve Solutions (TikTok specialists, $8.2M revenue 2024), Victory Digital (multi-platform suppression, $11.7M), and Targeted Victory (psychological profiling, $9.3M). These companies employ 23 former Cambridge Analytica staff members, including two senior data scientists who worked on CA’s original deterrence algorithms.

Democratic suppression operations flow through Hawkfish (Mike Bloomberg’s firm, $6.8M on TikTok), ACRONYM ($4.1M), and Precision Strategies ($3.9M). The techniques are identical across parties: psychological profiling leading to algorithmic demobilization. Only the targeted demographics differ—Republicans suppress urban youth and college voters, Democrats suppress rural youth and religious voters.

The suppression vendors openly advertise these capabilities to campaigns. Red Curve’s client presentation promises “15-20% turnout reduction among high-propensity opponent voters” through “strategic algorithmic demobilization.” Hawkfish’s marketing materials reference “psychographic deterrence modeling” and “social platform suppression optimization.”

Regulatory Blindness and Platform Complicity

Post-Cambridge Analytica reforms focused on ad transparency but ignored algorithmic manipulation. TikTok has banned paid political ads since 2019, but suppression campaigns don’t use traditional advertising. They pay creators for organic content, then boost its distribution through the platform’s recommendation system. This falls outside advertising regulations while achieving identical targeting outcomes.

TikTok earned $89 million from US political campaigns in 2024, 67% of it from “organic amplification” services that boost content through algorithmic distribution rather than traditional ads. This revenue model gives the platform an incentive to keep suppression capabilities in place while publicly opposing political manipulation.
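Applied to the $89 million total, the 67% share works out to:

$$
0.67 \times \$89\text{M} \approx \$59.6\text{M}\ \text{(organic amplification)}, \qquad 0.33 \times \$89\text{M} \approx \$29.4\text{M}\ \text{(everything else)}
$$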

Internal TikTok emails, released during ongoing FTC litigation, show that company executives knew about suppression campaigns by early 2023 but chose not to restrict the targeting capabilities that enable them. One email from a policy director notes: “These campaigns reduce political engagement, which actually serves our goal of decreasing controversial content on the platform.”

“These campaigns reduce political engagement, which actually serves our goal of decreasing controversial content on the platform.” – TikTok policy director, internal email released in FTC litigation, 2023

The regulatory gap exists because suppression campaigns don’t violate existing rules about false information or voter intimidation. The content is often factually accurate—highlighting real problems with electoral systems and political corruption. The manipulation occurs through algorithmic delivery to psychologically susceptible users, not through false claims.

Detection and Scale for 2026

Voter suppression through TikTok will expand significantly for the 2026 midterms. Both parties are hiring “algorithm strategists” and building “suppression creative teams” specifically for the platform. Combined spending on TikTok demobilization is projected to reach $120 million across all races.

Users can detect suppression targeting by monitoring their political content feeds. If your For You Page consistently shows discouraging political content—videos about electoral futility, corruption, or systemic problems—without balancing content about civic engagement or voting procedures, you may be targeted for suppression. The algorithm learns from your viewing behavior, so engaging with demotivating content increases its frequency.
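The “consistently, without balancing content” test can be made concrete with a simple personal feed log. The sketch below is a hypothetical heuristic, not a TikTok feature: it assumes you hand-label each political video you see as `discouraging` or `civic` (anything else as `other`), and the `min_days` and `threshold` defaults are arbitrary illustrations. Because the feed also reflects your own viewing, a positive result is a prompt to look closer, not proof of targeting.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FeedItem:
    day: str    # date the video was seen, e.g. "2024-10-01"
    label: str  # hypothetical hand-assigned label: "discouraging", "civic", or "other"


def daily_counts(items: list[FeedItem]) -> dict[str, Counter]:
    """Tally labels per day."""
    counts: dict[str, Counter] = {}
    for item in items:
        counts.setdefault(item.day, Counter())[item.label] += 1
    return counts


def looks_one_sided(items: list[FeedItem], min_days: int = 5, threshold: float = 0.8) -> bool:
    """Flag the feed when, on at least `min_days` days, discouraging videos make up
    at least `threshold` of the political videos seen (discouraging + civic).
    Days with no political videos are ignored. Both defaults are arbitrary."""
    skewed_days = 0
    for tally in daily_counts(items).values():
        political = tally["discouraging"] + tally["civic"]
        if political and tally["discouraging"] / political >= threshold:
            skewed_days += 1
    return skewed_days >= min_days


# Five days of nothing but discouraging political videos vs. five evenly mixed days.
skewed = [FeedItem(f"2024-10-0{d}", "discouraging") for d in range(1, 6) for _ in range(4)]
mixed = [FeedItem(f"2024-10-0{d}", label) for d in range(1, 6) for label in ("discouraging", "civic")]
print(looks_one_sided(skewed), looks_one_sided(mixed))  # → True False
```

Counting skewed days rather than pooling every video together keeps a single binge session from tripping the flag, which is closer to the “consistently” criterion.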

Campaign finance records provide another detection method. When creators suddenly post multiple videos about political disillusionment during election periods, cross-reference their names with FEC vendor payments. Suppression campaigns pay hundreds of creators simultaneously, creating traceable spending patterns in campaign finance databases.
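A minimal sketch of the cross-referencing step, assuming you have exported itemized disbursements to a CSV. The column names (`committee_name`, `recipient_name`, `disbursement_date`, `disbursement_amount`) are assumptions modeled on an FEC Schedule B download, the sample rows are invented placeholders, and the `min_creators` cutoff is arbitrary. It can only match payees that appear by name in the filings, so payments routed through an intermediary firm will not surface here.

```python
import csv
import io
import re
from collections import defaultdict


def normalize(name: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace,
    so 'Jane Doe, LLC' and 'JANE DOE LLC' compare equal."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", name.lower())
    return " ".join(cleaned.split())


def match_creators(disbursement_csv: str, creator_names: list[str]) -> dict[str, list[dict]]:
    """Return disbursement rows whose payee name contains a creator's name
    as a whole-word sequence. Column names are assumed, not an official schema."""
    targets = {normalize(n): n for n in creator_names if normalize(n)}
    hits: dict[str, list[dict]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(disbursement_csv)):
        payee = f" {normalize(row['recipient_name'])} "
        for key, original in targets.items():
            # Pad with spaces so "ann" does not match inside "joanne".
            if f" {key} " in payee:
                hits[original].append(row)
    return dict(hits)


def simultaneous_payers(hits: dict[str, list[dict]], min_creators: int = 3) -> dict[tuple[str, str], list[str]]:
    """Group matched payments by (committee, year-month) and keep the groups
    in which one committee paid at least `min_creators` distinct creators."""
    groups: dict[tuple[str, str], set[str]] = defaultdict(set)
    for creator, rows in hits.items():
        for row in rows:
            month = row["disbursement_date"][:7]  # assumes dates start with YYYY-MM
            groups[(row["committee_name"], month)].add(creator)
    return {key: sorted(names) for key, names in groups.items() if len(names) >= min_creators}


# Invented placeholder rows, for illustration only.
SAMPLE = """committee_name,recipient_name,disbursement_date,disbursement_amount
Example PAC,Creator One LLC,2024-09-03,1500.00
Example PAC,CREATOR TWO,2024-09-10,800.00
Example PAC,Creator Three Media,2024-09-21,2000.00
Other Committee,Creator One LLC,2024-07-01,500.00
Other Committee,Print Shop Inc,2024-09-05,300.00
"""

hits = match_creators(SAMPLE, ["Creator One", "Creator Two", "Creator Three"])
print(simultaneous_payers(hits))
# → {('Example PAC', '2024-09'): ['Creator One', 'Creator Three', 'Creator Two']}
```

Grouping by committee and month is one simple way to express “pays many creators simultaneously”; a real audit would also need to handle name variants that simple normalization misses.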

The Cambridge Analytica precedent shows these techniques will persist regardless of platform policies or regulatory attention. The fundamental insight—that psychological profiling enables voter suppression—transfers across technologies and political systems. TikTok provides more sophisticated targeting than Facebook offered in 2016, making suppression campaigns more effective and harder to detect.

Every major campaign now budgets for suppression alongside persuasion and mobilization. The technique proved its effectiveness in 2024 competitive races, where targeted demographics showed measurably lower turnout compared to non-targeted control groups. Cambridge Analytica’s deterrence strategy has evolved from scandal to standard practice, optimized for social media algorithms its founders could never have imagined.